Get to know the UPV/EHU Faculty of Informatics

The centre of reference for education and technical/scientific knowledge in informatics and artificial intelligence.

First published: 10 July 2024

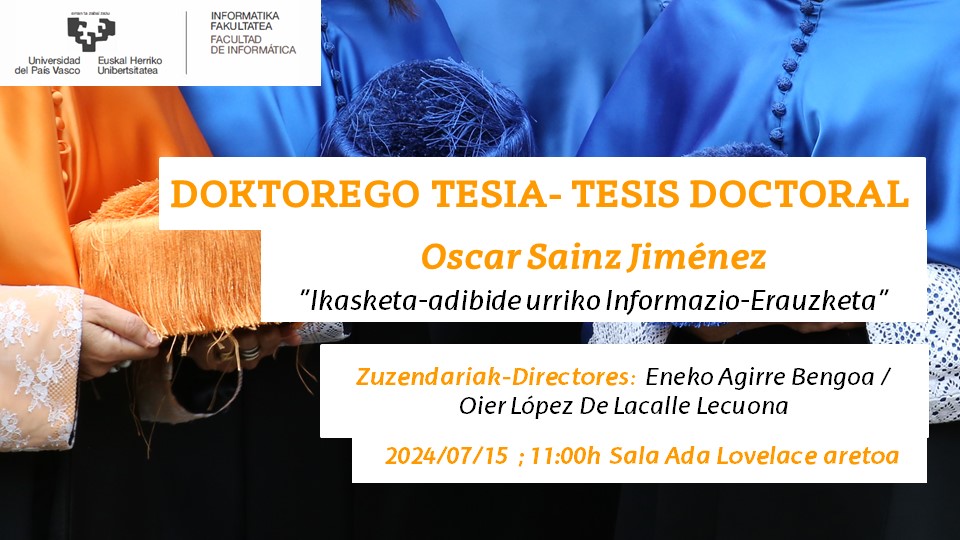

Author: Oscar Sainz Jiménez

Thesis: "Ikasketa-adibide urriko Informazio-Erauzketa"

Supervisors: Eneko Agirre Bengoa / Oier López de Lacalle Lekuona

Date: 15 July 2024

Time: 11:00

Venue: Ada Lovelace aretoa

Abstract:

"The field of Information Extraction (IE) allows machines to identify and categorize relevant information that appears in the text, a task that is considered challenging even for humans. Recent advances in the field leverage Machine Learning models trained on large amounts of carefully curated data. However, annotating large corpora is laborious and time-consuming, which can become prohibitive in low-resource scenarios.

The goal of this thesis is to explore and develop IE methods that work in low-resource scenarios, in particular by exploiting the generalization capabilities of Language Models (LMs) and the knowledge transfer they allow between high-resource and low-resource scenarios. The thesis comprises two main parts. In the first part, we developed a Zero- and Few-shot Information Extractor using encoder-only Language Models. In the second part, we moved to Large Language Models and addressed some limitations of our previous approach.

In more detail, developing a Zero- and Few-shot Information Extractor requires bypassing the need for large amounts of schema-dependent annotated data. Recent advances in the field have shown that many tasks can be reformulated as natural language or as other high-resource tasks, and be solved by pre-trained Language Models. In this thesis, we propose the use of Textual Entailment as an intermediate task for Information Extraction. By reformulating the tasks as Textual Entailment, the model is no longer tied to a specific schema, allowing it to generalize and work as a zero-shot information extractor out of the box. We have shown that this approach can achieve zero-shot results similar to supervised systems from a few years ago. Moreover, the model can be further trained with examples of the task, achieving results close to the supervised state-of-the-art. Removing the schema dependency allows the model to be trained on multiple schemas (that is, different datasets) at the same time. We have shown that for datasets from similar tasks, a model trained on one schema can transfer the knowledge to another schema, improving the zero-shot performance and further reducing the gap with supervised systems.

Reformulating Information Extraction tasks as Textual Entailment requires some manual work: the instances of the task need to be converted into premise-hypothesis pairs. To that end, manually created templates are used to generate the hypotheses, which we call verbalizations. As part of this thesis, we have shown that the effort of creating verbalizations is significantly lower than the effort of creating annotations for the same task, yielding better performance for the same amount of work. We have also shown that verbalizations written by different experts, each with their own style, still perform similarly. Based on these results, we have proposed a new workflow, verbalize while defining, which we compare with the traditional define, annotate, and train workflow. This workflow allows novice users to model complex IE schemas with strong zero-shot performance, which expert annotators can later curate with much less effort. We have developed a practical demonstration of the proposed workflow.

For the last part of this thesis, we analyzed and addressed the limitations of the Textual Entailment approach. We leveraged the progress made in decoder-only LLMs and implemented GoLLIE: an LLM capable of following annotation guidelines to perform IE annotations. Unlike the Textual Entailment approach, GoLLIE leverages detailed guidelines instead of simple verbalizations, which is possible thanks to its longer context window and allows the model to follow more fine-grained instructions. Additionally, we provided an error analysis and future research directions for the field."
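
The Textual Entailment reformulation described in the abstract can be illustrated with a minimal Python sketch. It is not the thesis's implementation: the relation labels, the templates, the `verbalize` and `classify` helpers, and the threshold are all hypothetical, and `toy_entailment_score` is a crude word-overlap stand-in for a real entailment model, included only so the example runs on its own.

```python
import re
from typing import Callable, Dict, List, Tuple

# Hypothetical verbalization templates: each relation label maps to one or
# more hypothesis templates. These labels and sentences are illustrative only.
TEMPLATES: Dict[str, List[str]] = {
    "per:city_of_birth": ["{subj} was born in {obj}."],
    "org:founded_by": ["{obj} founded {subj}.", "{subj} was founded by {obj}."],
    "per:employee_of": ["{subj} works for {obj}."],
}


def verbalize(subj: str, obj: str) -> List[Tuple[str, str]]:
    """Turn an entity pair into (label, hypothesis) candidates."""
    return [
        (label, template.format(subj=subj, obj=obj))
        for label, templates in TEMPLATES.items()
        for template in templates
    ]


def toy_entailment_score(premise: str, hypothesis: str) -> float:
    """Stand-in for an entailment model (assumption, not a real NLI system):
    the fraction of hypothesis words that also occur in the premise."""
    premise_words = set(re.findall(r"\w+", premise.lower()))
    hypothesis_words = re.findall(r"\w+", hypothesis.lower())
    if not hypothesis_words:
        return 0.0
    hits = sum(word in premise_words for word in hypothesis_words)
    return hits / len(hypothesis_words)


def classify(
    premise: str,
    subj: str,
    obj: str,
    scorer: Callable[[str, str], float] = toy_entailment_score,
    threshold: float = 0.9,  # hypothetical cut-off for "no relation"
) -> Tuple[str, float]:
    """Pick the label whose hypothesis is best entailed by the premise."""
    best_label, best_score = "no_relation", 0.0
    for label, hypothesis in verbalize(subj, obj):
        score = scorer(premise, hypothesis)
        if score > best_score:
            best_label, best_score = label, score
    if best_score < threshold:
        return "no_relation", best_score
    return best_label, best_score


label, score = classify(
    "Ada Lovelace was born in London in 1815.", "Ada Lovelace", "London"
)
```

Because the schema lives only in `TEMPLATES`, switching to a different schema in this sketch means editing the templates rather than annotating new training data. In practice the `scorer` argument would be replaced by a trained entailment model.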