Arina is composed of two subclusters: kalk2020 and kalk2017-katramila.

As a whole, Arina features 147 nodes, which provide 296 multicore processors (CPUs) with a total of 4396 cores.

Arina is equipped with two high-performance file systems, which provide a total net storage capacity of 120 TB.
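The aggregate figures can be cross-checked against the per-set figures quoted in the subcluster sections of this page. The following is a minimal Python sketch of that arithmetic; the names `NODE_SETS`, `STORAGE_TB` and `totals` are illustrative and not part of any Arina tooling.

```python
# Per-set figures as quoted on this page: (nodes, CPUs, cores).
NODE_SETS = {
    "kalk2020": (45, 90, 1800),
    "kalk2017": (68, 136, 1904),
    "katramila": (34, 70, 692),
}

# Net capacity of each parallel file system, in TB.
STORAGE_TB = {
    "BeeGFS (kalk2020)": 80,
    "Lustre (kalk2017-katramila)": 40,
}


def totals(node_sets):
    """Sum nodes, CPUs and cores over all node sets."""
    nodes = sum(n for n, _, _ in node_sets.values())
    cpus = sum(c for _, c, _ in node_sets.values())
    cores = sum(k for _, _, k in node_sets.values())
    return nodes, cpus, cores


if __name__ == "__main__":
    nodes, cpus, cores = totals(NODE_SETS)
    print(f"nodes={nodes} cpus={cpus} cores={cores}")
    print(f"storage={sum(STORAGE_TB.values())} TB")
    # Average only: the page does not state that nodes within a set are identical.
    for name, (n, _, k) in NODE_SETS.items():
        print(f"{name}: {k / n:.1f} cores per node on average")
```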

Within each subcluster, the nodes are connected to each other via an InfiniBand network, which offers high bandwidth and low latency for internode communication.

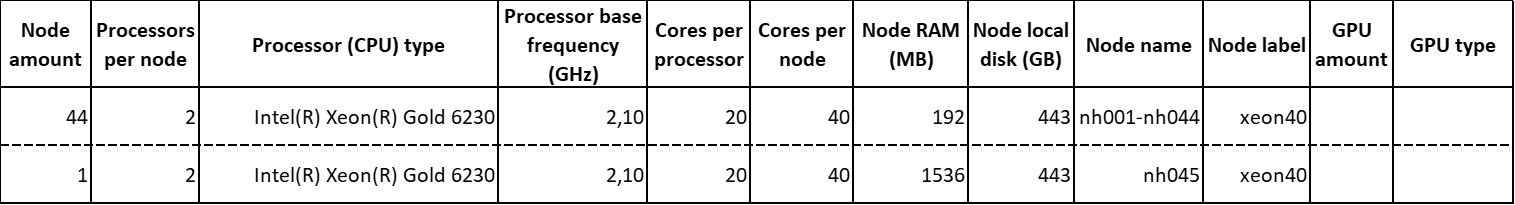

Subcluster kalk2020

The subcluster kalk2020 features the following computing nodes:

In total, this subcluster comprises 45 computing nodes, which provide 90 multicore processors with a total of 1800 cores.

This subcluster is equipped with a parallel cluster file system (BeeGFS) with a net capacity of 80 TB. Its storage is shared across all kalk2020 nodes.

The SLURM queue system manages the jobs submitted to kalk2020.
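As an illustration of what a job submitted through SLURM looks like, the sketch below builds the text of a batch script in Python. It uses only standard `sbatch` directives (`--job-name`, `--nodes`, `--ntasks-per-node`, `--time`); the function name `slurm_script`, the job name, the resource values and the executable are hypothetical placeholders, and site-specific options that this page does not describe (such as partitions or accounts) are left out on purpose.

```python
def slurm_script(job_name, nodes, tasks_per_node, walltime, command):
    """Return the text of a SLURM batch script.

    Only standard sbatch directives are used; every value passed in
    is a placeholder to be adapted to the actual job.
    """
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={tasks_per_node}",
        f"#SBATCH --time={walltime}",
        "",
        command,
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical job: one node, 8 tasks, one hour, placeholder executable.
    print(slurm_script("test_job", 1, 8, "01:00:00", "srun ./my_program"))
```

The resulting text would typically be saved to a file and submitted with `sbatch`.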

Internode communication is accelerated by an InfiniBand network with a transfer speed of up to 100 Gb/s (EDR).

Subcluster kalk2017-katramila

This subcluster includes two sets of computing nodes, namely kalk2017 and katramila.

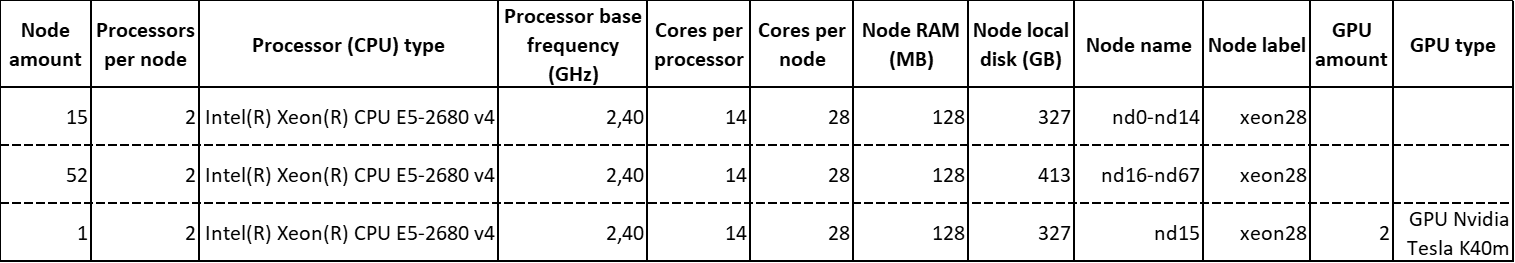

The kalk2017 node compound is composed of:

In total, it features 68 computing nodes, which provide 136 multicore processors with a total of 1904 cores.

Each Nvidia Tesla K40m GPU features 2880 GPU cores and 12 GB of integrated GPU RAM.

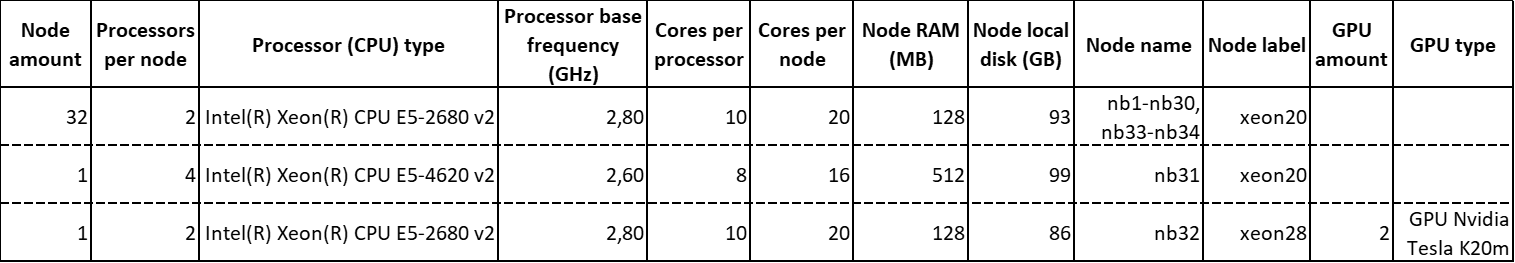

The katramila node compound is composed of:

In total, it features 34 computing nodes, which provide 70 multicore processors with a total of 692 cores.

Each Nvidia Tesla K20m GPU features 2496 GPU cores and 5 GB of integrated GPU RAM.

These two sets of computing nodes share a parallel cluster file system (Lustre) with a net capacity of 40 TB. Its storage is therefore shared across all nodes of both kalk2017 and katramila.

The TORQUE/MAUI queue system manages the jobs submitted to kalk2017-katramila. Unless otherwise specified in the job submission, the queue system automatically sends jobs to either kalk2017 or katramila computing nodes, depending on node availability and the requested resources.
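To make the default behaviour concrete, the sketch below builds a TORQUE job script in Python that requests only a node count, cores per node and a wall time. Because it names no particular node set, a job like this would leave the choice between kalk2017 and katramila to the queue system. The directives (`#PBS -N`, `#PBS -l nodes=...:ppn=...`, `#PBS -l walltime=...`) and the `PBS_O_WORKDIR` variable are standard TORQUE; the function name `torque_script`, the resource values and the executable are hypothetical placeholders, and how to pin a job to one node set is not described on this page, so it is not shown.

```python
def torque_script(job_name, nodes, ppn, walltime, command):
    """Return the text of a TORQUE (PBS) job script.

    No node property is requested, so node selection is left
    entirely to the scheduler.
    """
    lines = [
        "#!/bin/bash",
        f"#PBS -N {job_name}",
        f"#PBS -l nodes={nodes}:ppn={ppn}",
        f"#PBS -l walltime={walltime}",
        "",
        # TORQUE starts jobs in the home directory; move to the submit directory.
        "cd $PBS_O_WORKDIR",
        command,
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical job: one node, 8 cores, one hour, placeholder executable.
    print(torque_script("test_job", 1, 8, "01:00:00", "./my_program"))
```

The resulting text would typically be saved to a file and submitted with `qsub`.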

Internode communication is accelerated by an InfiniBand network with a transfer speed of up to 56 Gb/s (FDR).
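The link rates on this page are given in gigabits per second. The sketch below converts them to gigabytes per second and estimates a lower bound on the time needed to move a given amount of data between two nodes. It treats the quoted rates as nominal upper limits and ignores encoding and protocol overhead, so real transfers will be slower; the function names and the 10 GB payload are illustrative assumptions.

```python
# Nominal link rates quoted on this page, in gigabits per second.
LINK_RATE_GBIT = {
    "EDR (kalk2020)": 100,
    "FDR (kalk2017-katramila)": 56,
}


def gbit_to_gbyte(rate_gbit):
    """Convert a rate in Gb/s to GB/s (8 bits per byte)."""
    return rate_gbit / 8


def min_transfer_seconds(size_gbyte, rate_gbit):
    """Lower bound on transfer time, ignoring all overhead."""
    return size_gbyte / gbit_to_gbyte(rate_gbit)


if __name__ == "__main__":
    payload_gbyte = 10  # hypothetical amount of data to move
    for name, rate in LINK_RATE_GBIT.items():
        print(
            f"{name}: {gbit_to_gbyte(rate):.1f} GB/s, "
            f"at least {min_transfer_seconds(payload_gbyte, rate):.2f} s "
            f"for {payload_gbyte} GB"
        )
```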