Discover the Faculty of Informatics of the UPV/EHU

The Faculty is a reference institution for training and technical/scientific knowledge in computer science and artificial intelligence.

First publication date: 11/10/2024

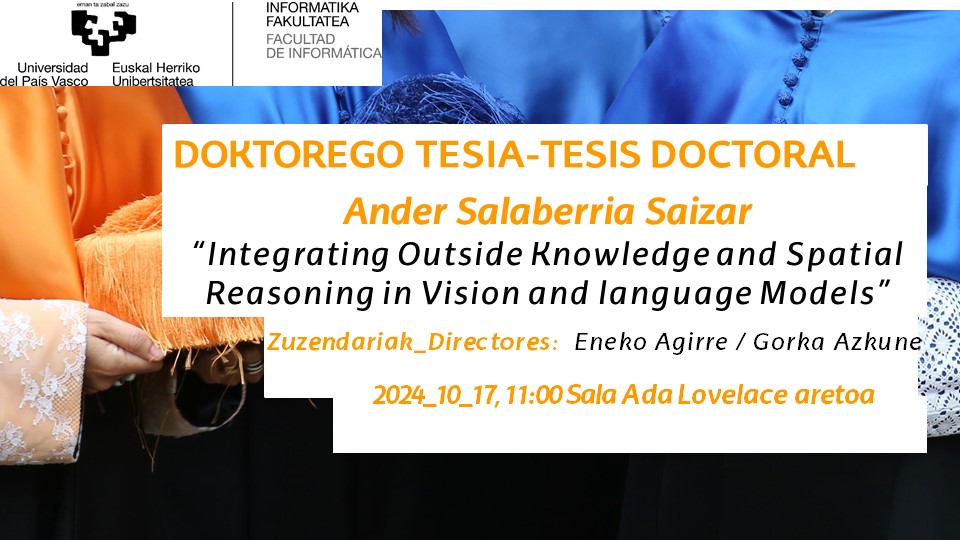

Author: Ander Salaberria Saizar

Thesis: "Integrating Outside Knowledge and Spatial Reasoning in Vision and language Models"

Directors: Eneko Agirre Bengoa / Gorka Azcune Galparsoro

Day: 17 October 2024

Time: 11:00h

Place: Ada Lovelace room (Faculty of Informatics)

Abstract:

"The fields of natural language processing (NLP) and computer vision (CV) have advanced rapidly in recent years thanks to increases in computational power and data availability, and to an ever-growing research community. The bridge between NLP and CV has also advanced, particularly in tasks that require grounding the textual and visual modalities, such as visual question answering (VQA) and text-to-image generation. This paves the way for more sophisticated systems and applications across various domains. Nevertheless, these systems still have weaknesses with no trivial solution.

The goal of this thesis is to explore two limitations of current Vision-and-language models (VLMs): world knowledge integration and spatial reasoning. This dissertation can be divided into two main parts, one for each limitation that we tackle. In the first part, we verbalize images to better leverage world knowledge that is implicitly encoded in language models. In contrast, in the second, we exploit the generation of synthetic data from object annotations to aid the spatial reasoning of both language models and text-to-image generators.

More specifically, visio-linguistic tasks such as VQA often require reasoning over an image while integrating world knowledge. Since previous work has shown that pre-trained language models encode this knowledge, we propose a unimodal (text-only) approach: we automatically generate captions from images and discard the image for the rest of the inference. We show that using only textual representations as the language model's input is especially effective for VQA tasks that require external knowledge. In addition, we show that our unimodal approach outperforms VLMs with a comparable number of parameters, and we observe that both approaches are complementary regardless of whether world knowledge is needed. Our qualitative analysis reveals that automatic captions often fail to capture the information needed to answer the question, a shortcoming that the stronger inference ability of our unimodal model seems to offset.

Turning to spatial reasoning, we show that text-only language models can learn to ground spatial relations (such as 'left of' or 'below') if they are given explicit object locations and are properly trained to use them. We provide this spatial information through location tokens that represent bounding-box coordinates, extracted with an off-the-shelf object detector. To learn how each spatial relation maps to different sets of location tokens, we define simple heuristics that determine whether a given relation holds, and we use that signal to build a synthetic dataset and fine-tune language models. In doing so, we set a new state of the art on the VSR dataset, even outperforming VLMs. Our analysis shows that our text-only language models can, to some extent, generalize beyond the relations seen during training, and that they learn more useful information than what is encoded in the aforementioned heuristics.

We also tackle text-to-image generation with a similar approach. We hypothesize that current systems do not accurately depict spatial relations in generated images because the training data lacks them. We therefore introduce the Spatial Relation for Generation (SR4G) dataset, which contains synthetic captions covering 14 explicit spatial relations, 9 million image-caption pairs for training, and more than 60 thousand captions for evaluation. We also provide an unseen split to test generalization, in which different sets of objects are used for training, development, and testing. We show that fine-tuning two different Stable Diffusion models (denoted SD_SR4G) yields significant improvements in the VISOR metric, an evaluation metric specifically designed to check whether an image depicts a given spatial relation. The improvement holds on the unseen split, showing that SD_SR4G generalizes to unseen objects. In this way, we improve the state of the art with fewer parameters while avoiding complex architectures that involve layout generation and large language models.

"
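The location tokens and spatial heuristics described in the abstract can be illustrated with a minimal Python sketch. It is not the thesis's implementation: the `Box` class, the `<loc_N>` token format, the 32-bin quantization, the input template, and the centroid-based rules for relations such as 'left of' and 'below' are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass

# Hypothetical number of bins used to quantize coordinates into location tokens.
NUM_BINS = 32


@dataclass
class Box:
    """Axis-aligned bounding box in pixels; (x_min, y_min) is the top-left corner."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def centroid(self) -> tuple[float, float]:
        return ((self.x_min + self.x_max) / 2, (self.y_min + self.y_max) / 2)


def to_location_tokens(box: Box, width: int, height: int) -> str:
    """Quantize box coordinates into discrete tokens such as '<loc_3>' (assumed format)."""
    def quantize(value: float, size: int) -> int:
        # Map a pixel coordinate to a bin index in [0, NUM_BINS - 1].
        return min(int(value / size * NUM_BINS), NUM_BINS - 1)

    coords = [
        quantize(box.x_min, width),
        quantize(box.y_min, height),
        quantize(box.x_max, width),
        quantize(box.y_max, height),
    ]
    return " ".join(f"<loc_{c}>" for c in coords)


def relation_holds(relation: str, subj: Box, obj: Box) -> bool:
    """Hypothetical centroid heuristics; image y coordinates grow downward."""
    (sx, sy), (ox, oy) = subj.centroid(), obj.centroid()
    rules = {
        "left of": sx < ox,
        "right of": sx > ox,
        "above": sy < oy,
        "below": sy > oy,
    }
    return rules[relation]


def make_example(relation: str, subj: Box, obj: Box,
                 width: int, height: int) -> tuple[str, bool]:
    """Build one synthetic (text input, label) pair for fine-tuning a text-only model."""
    text = (
        f"{subj.label} {to_location_tokens(subj, width, height)} "
        f"{obj.label} {to_location_tokens(obj, width, height)} "
        f"Is the {subj.label} {relation} the {obj.label}?"
    )
    return text, relation_holds(relation, subj, obj)
```

For example, with `cat = Box("cat", 10, 200, 110, 300)` and `dog = Box("dog", 300, 50, 400, 150)` in a 640×480 image, `make_example("left of", cat, dog, 640, 480)` returns a text input containing eight location tokens and the label `True`. Comparing centroids is only one possible heuristic; overlapping or nested boxes, for instance, would need additional rules.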